Classifier neural networks are often used to detect malware, such as worms. But what is a classifier, and how does it work?

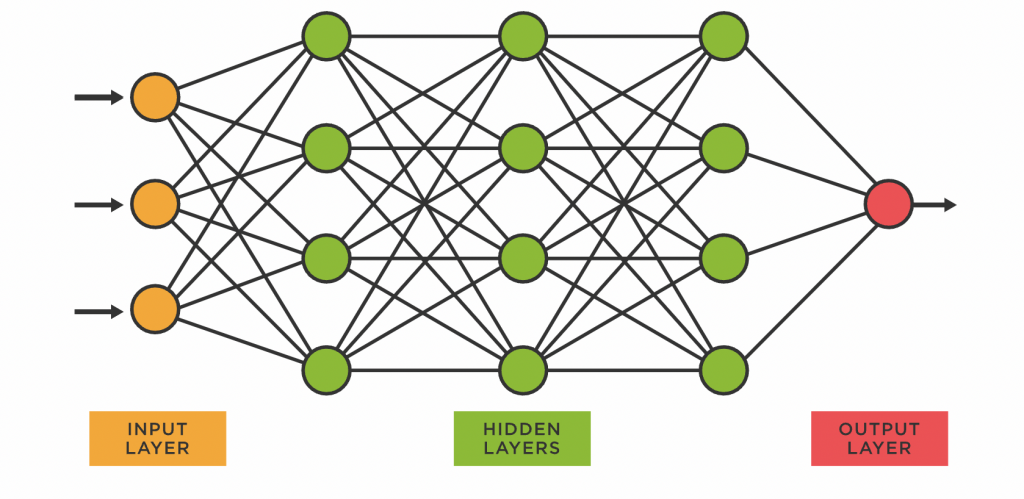

To explain what a classifier is, we first need to cover the basics of neural networks. A neural network is an algorithm loosely inspired by the human brain. It is composed of layers of neurons; in the simplest (fully connected) design, each neuron connects to every neuron in the next layer. The first layer is the input layer, where the input data is broken down into individual values called features. Each neuron in the input layer is assigned one feature. The last layer is the output layer, and the values its neurons hold at the end of processing determine the output of the network. In a classifier, each output neuron is assigned one of the classes the model can predict, and the neuron with the highest final value determines the model’s prediction. Between the input and output layers are the hidden layers, which add extra stages of processing and allow the model to learn complex patterns in the data. This network of individual neurons arranged in layers is why we call the algorithm a neural network.
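To make the structure concrete, here is a minimal Python sketch (standard library only, no ML framework) of how a fully connected network’s parameters might be laid out. The layer sizes, the output values and the `init_dense_network` helper are hypothetical, chosen only for illustration. Each layer after the input layer gets one row of incoming weights and one bias per neuron, and the last lines show how a prediction is read off the output layer as the index of its largest value.

```python
import random

def init_dense_network(layer_sizes, seed=0):
    """Create random weights and zero biases for a fully connected network.

    weights[k][j][i] is the weight on the connection from neuron i of layer k
    to neuron j of layer k + 1, so every neuron feeds every neuron after it.
    """
    rng = random.Random(seed)
    weights, biases = [], []
    for n_in, n_out in zip(layer_sizes[:-1], layer_sizes[1:]):
        weights.append([[rng.uniform(-1.0, 1.0) for _ in range(n_in)] for _ in range(n_out)])
        biases.append([0.0] * n_out)  # one bias per non-input neuron
    return weights, biases

# Hypothetical sizes: 4 input features, one hidden layer of 5 neurons, 3 classes.
layer_sizes = [4, 5, 3]
weights, biases = init_dense_network(layer_sizes)

# Count the connections between consecutive layers: 4*5 + 5*3
connections = sum(len(w) * len(w[0]) for w in weights)
print(connections)  # 35

# Reading off a prediction: the class whose output neuron has the highest value.
output_values = [0.2, 1.7, -0.4]  # hypothetical output-layer values
predicted_class = max(range(len(output_values)), key=lambda j: output_values[j])
print(predicted_class)  # 1
```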

The model makes a prediction by performing what’s known as a forward pass. This means it takes the input features, processes them, and sends the results to the next layer of neurons, repeating until it reaches the output layer. Each neuron processes its data in a simple way: it multiplies each input it receives by a corresponding weight, adds up all these weighted inputs, and then adds a bias term. This sum is passed through an activation function, such as arctangent or ReLU (ReLU is simply the piecewise function that is 0 when x < 0 and x otherwise). The activation function is what makes the network non-linear; without it, stacking layers would be no more expressive than a single weighted sum. The result is the value of the neuron, which it passes on to each of the neurons in the next layer. The cycle repeats until the values of the output layer are found, and these determine the class the model predicts. The output values are often normalized, commonly with the softmax function, so that each one represents the probability that the data belongs to that particular class.
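The forward pass can be sketched in a few lines of Python. This is an illustrative sketch under stated assumptions: the function names, the tiny network (2 inputs, 2 hidden neurons, 2 output classes) and its hand-picked weights are hypothetical, hidden layers use ReLU, and the output layer’s raw scores are normalized with softmax.

```python
import math

def relu(x):
    # 0 for negative inputs, x otherwise
    return max(0.0, x)

def neuron(inputs, weights, bias, activation):
    # multiply each input by its weight, add them up, add the bias, apply the activation
    z = sum(x * w for x, w in zip(inputs, weights)) + bias
    return activation(z)

def softmax(scores):
    # exponentiate and normalize so the outputs are positive and sum to 1
    # (subtracting the max first avoids overflow without changing the result)
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def forward(features, network):
    """network: list of (weight_rows, biases), one entry per layer after the input.

    weight_rows[j] holds the incoming weights of neuron j in that layer.
    """
    values = features
    for k, (weight_rows, biases) in enumerate(network):
        is_output = k == len(network) - 1
        # hidden layers apply ReLU; the output layer keeps its raw scores for softmax
        activation = (lambda z: z) if is_output else relu
        values = [neuron(values, w, b, activation) for w, b in zip(weight_rows, biases)]
    return softmax(values)

# Hypothetical 2-input network: one hidden layer of 2 neurons, 2 output classes.
network = [
    ([[1.0, -1.0], [0.5, 0.5]], [0.0, 0.0]),  # hidden layer
    ([[1.0, 0.0], [0.0, 1.0]], [0.0, 0.0]),   # output layer
]
probs = forward([2.0, 1.0], network)
print(probs)  # two probabilities summing to 1; the second class is more likely
```

Here the hidden layer produces the values 1.0 and 1.5, which the output layer passes through unchanged as raw scores before softmax turns them into probabilities.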

To make accurate predictions and learn the patterns in the data, the model has to find a set of weights and biases for every neuron that makes its predictions as accurate as possible. In practice, training minimizes a loss function, a differentiable measure of how far the model’s output probabilities are from the correct answers, because accuracy itself cannot be optimized directly with gradients. The model does this with a process known as backpropagation: starting at the output layer and working backwards to the first layer, it calculates how much each weight and bias contributed to the error, and each one is then adjusted slightly in the direction that reduces the error (a step called gradient descent). Training uses labelled pairs of data (inputs for which we tell the model which class it should predict) and repeats this process over the data many times. It is lengthy and computationally intensive, but it is essentially how neural networks are trained. The amount of data needed varies, but it tends to be large, since more representative examples generally help the model generalize to data it hasn’t seen.
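Below is a minimal sketch of backpropagation with gradient descent for a network with one hidden layer, written in plain Python so every step is visible. Several choices are assumptions rather than anything the text prescribes: tanh hidden units, a softmax output, the cross-entropy loss, the learning rate, and the toy labelled dataset. Real frameworks compute these gradients automatically, but the arithmetic follows the same chain rule.

```python
import math
import random

def softmax(z):
    m = max(z)
    e = [math.exp(v - m) for v in z]
    s = sum(e)
    return [v / s for v in e]

def init(n_in, n_hidden, n_out, seed=0):
    rng = random.Random(seed)
    W1 = [[rng.uniform(-0.5, 0.5) for _ in range(n_in)] for _ in range(n_hidden)]
    W2 = [[rng.uniform(-0.5, 0.5) for _ in range(n_hidden)] for _ in range(n_out)]
    return {"W1": W1, "b1": [0.0] * n_hidden, "W2": W2, "b2": [0.0] * n_out}

def forward(p, x):
    # hidden layer with tanh activation, then softmax over the output scores
    h = [math.tanh(sum(w * xi for w, xi in zip(row, x)) + b) for row, b in zip(p["W1"], p["b1"])]
    z = [sum(w * hj for w, hj in zip(row, h)) + b for row, b in zip(p["W2"], p["b2"])]
    return h, softmax(z)

def loss(p, data):
    # average cross-entropy: -log(probability given to the correct class)
    return sum(-math.log(forward(p, x)[1][y]) for x, y in data) / len(data)

def backprop(p, x, y):
    """Gradients of the cross-entropy loss for one labelled example."""
    h, probs = forward(p, x)
    # output layer: d loss / d z_k = probs_k - 1[k == y] (softmax + cross-entropy)
    dz = [pk - (1.0 if k == y else 0.0) for k, pk in enumerate(probs)]
    gW2 = [[dzk * hj for hj in h] for dzk in dz]
    # hidden layer: send the error back through W2, then through tanh' = 1 - tanh^2
    dh = [sum(dz[k] * p["W2"][k][j] for k in range(len(dz))) for j in range(len(h))]
    da = [dhj * (1.0 - hj * hj) for dhj, hj in zip(dh, h)]
    gW1 = [[daj * xi for xi in x] for daj in da]
    return {"W1": gW1, "b1": da[:], "W2": gW2, "b2": dz[:]}

def train(p, data, lr=0.1, epochs=200):
    for _ in range(epochs):
        for x, y in data:
            g = backprop(p, x, y)
            # gradient descent: nudge every weight and bias against its gradient
            for name in ("W1", "W2"):
                for row, grow in zip(p[name], g[name]):
                    for i in range(len(row)):
                        row[i] -= lr * grow[i]
            for name in ("b1", "b2"):
                for i in range(len(p[name])):
                    p[name][i] -= lr * g[name][i]
    return p

# Hypothetical labelled pairs: class 1 when x0 + x1 > 0, otherwise class 0.
data = [([1.0, 2.0], 1), ([2.0, 0.5], 1), ([-1.0, -1.5], 0), ([-2.0, 0.5], 0)]
params = init(n_in=2, n_hidden=3, n_out=2)
before = loss(params, data)
train(params, data)
after = loss(params, data)
print(before, after)  # the loss should drop after training
```

Updating after every single example, as `train` does here, is a form of stochastic gradient descent; averaging gradients over small batches of examples is a common alternative.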

After this process, we have a trained classifier model. To use it, we feed in the features of new data, for example data about a potentially malicious application. The model performs a forward pass, and the output neuron with the highest value represents the class the model thinks the data belongs to. For a classifier network that detects malware, the input can be large, requiring hundreds of input neurons, but in the end there can be as few as two output neurons: one for “malicious” and one for “benign”.
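As a rough illustration of this final step, the sketch below turns a description of an application into a feature vector and picks whichever of two output neurons scores higher. Everything specific here is hypothetical: the four feature names, the labels, and the hand-set weights are made up for the example, and the network has no hidden layer to keep it short. A real detector would learn its weights from labelled samples and would typically use far more features.

```python
# Hypothetical feature names; a real detector would use many more
# (often hundreds), extracted from the application being scanned.
FEATURES = ["writes_to_system_dir", "opens_network_socket", "copies_itself", "is_signed"]
CLASSES = ["benign", "malicious"]

def to_feature_vector(app_info):
    # 1.0 if the property was observed, else 0.0
    return [1.0 if app_info.get(name, False) else 0.0 for name in FEATURES]

# Hand-set, purely illustrative weights for a network with no hidden layer:
# one row per output neuron, one weight per input feature.
WEIGHTS = [
    [-1.0, -0.5, -2.0, 1.5],  # "benign" output neuron
    [1.0, 0.5, 2.0, -1.5],    # "malicious" output neuron
]
BIASES = [0.5, -0.5]

def classify(app_info):
    x = to_feature_vector(app_info)
    scores = [sum(w * xi for w, xi in zip(row, x)) + b for row, b in zip(WEIGHTS, BIASES)]
    # the output neuron with the highest value decides the predicted class
    best = max(range(len(scores)), key=lambda k: scores[k])
    return CLASSES[best]

print(classify({"opens_network_socket": True, "copies_itself": True}))  # malicious
print(classify({"is_signed": True}))  # benign
```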